full image used as stand-in for “debaters have capabilities the judge does not”

"an image is more likely to contain pixels that convincingly demonstrate truth than to contain pixels that convincingly demonstrate a lie. 6 pixels, 50% honest 50% malicious, are much better 6 random pixels. If judge were a human capable of reasoning about a few arguments at a time but not sifting through the huge set of all possible arguments, optimal play in debate can (we hope) reward debating agents for doing the sifting for us even if we lack an a priori mechanism for distinguishing good arguments from bad."

visualization idea: “train separate network to predict original image from sparse mask” - as “what judge might be thinking”

single-pixel debate game (for humans) - https://github.com/openai/pixel

highlighted: testing that human judges tend to reach good conclusions even on debates where they're biased

debate doesn't address adversarial examples, distributional shift

debate could fail to be competitive with cheaper/less safe methods

“Even for weaker systems that humans can supervise, debate could make the alignment task easier by reducing the sample complexity required to capture goals below the sample complexity required for strong performance at a task.”

"One approach to specifying complex goals asks humans to judge during training which agent behaviors are safe and useful, but this approach can fail if task is too complicated for a human to directly judge."

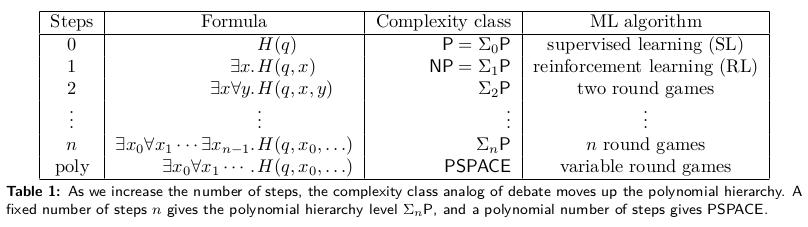

"debate with optimal play can answer any question in PSPACE given polynomial time judges (direct judging answers only NP questions), corresponding to aligned agents exponentially smarter than the judge."

- simple ("P"): human can perform the task, we can just imitate

- harder ("NP"): human can evaluate but not perform (e.g., backflip in weird action space))

- analogy: P vs. NP: human can compute answer vs. human can verify given a certificate

- human might be unable to judge whether an explained answer/behavior is correct - might be hard to understand without help, …

eventually debate would be in natural language

if AlphaZero shows us a move, we can't easily judge whether it's good or not. but we can ask another copy for a countermove, etc. →

2022-04-17 22:12

started reading today but didn't finish

ideal DEBATE continues until one debater gives up - i.e., does not have anything to add

debate game: both agents state answers, then take turns making statements, then judge judges who wins. human will decide based on whatever human language instructions, like “who said the most useful true thing”.

train time: debate game is actually played

test time: just take the answers the debaters commit to

“training is stable around the Nash equilibria”

“agents are assumed to be using the best arguments available”

seeing “alphago chooses move X often” is evidence it's a good move

reference for the computation stuff: Michael Sipser. Introduction to the Theory of Computation.

"Environment interaction: If we want a system to take actions that affect the environment such as operating a robot, the desired output is a sequence of actions a0 , a1 , . . . where each action can only be computed once the previous action is taken. In this case we need one debate per action, though at test time we can again stop once the actions are computed."

"Joint training of agents and judge together would be particularly bad as a test of debate, since it assumes knowledge of the truth at game time which is exactly what we hope to do without."

debate vs. amplification:

Answerer: takes questions Q, answers A

Questioner: takes question Q, gives subquestions q_k

Checker: takes (Q, q_k, a_k) to final answer A

to make amplification more like debate (for self-play): train questioner adversarially, to make inconsistencies in answerer

e.g.:

Answerer: Q1 → A1

Questioner: Q1 → Q2 Q3 Q4

Answerer: Q2 → A2, Q3 → A3, Q4 → A4

Checker: A2 A3 A4 → A5, A1 ≠ A5